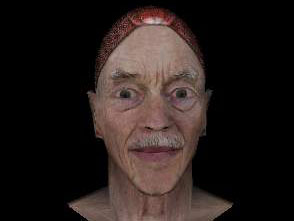

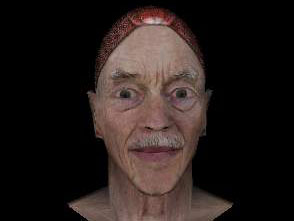

figure 1

We used a realistic-looking 3D “talking head” provided by Haptek Corp. (see figure 1) to build our anthropomorphic interface agents. We set up an experimental environment in which the experimenter could see and hear participants' responses through a camera and microphone. A network system also allowed the experimenter to remotely control the talking head, which appeared on the participant's screen. Several aspects of the talking head could be controlled, including its appearance, its style, and the speech synthesizer it used.

Our first experiment manipulated agent fidelity and task objectiveness. The two tasks were a travel task and an editing task, and the agent's face was animated, stiff, or iconic. The travel task was chosen as a creative, opinion-based task in which interacting with an agent might be viewed as an opportunity to think more deeply about the task by discussing points of view about the importance of travel items. The editing task was chosen to represent an opportunity to use an agent primarily as a reference source rather than as a guide or teacher. Usefulness was evaluated along both the performance and satisfaction dimensions. The results showed that the perception of the agent was strongly influenced by the task, while the agent feature we manipulated (appearance, in this case) had little effect. Details can be found in our CogSci'2002 publication.

The second experiment was designed to examine the effect of an interface assistant’s initiative on people’s perception of the assistant and on their task performance, and to compare that performance with the use of a traditional manual. A secondary goal was to examine whether a user's personality plays a role in his or her reactions to reactive and proactive agents. An editing task was designed to represent an opportunity to use an interface assistant primarily as a replacement for an online manual. Anecdotal evidence (Clippy) suggested that proactive assistant behavior would not enhance performance and would be viewed as intrusive. Our results showed that participants in all three conditions performed similarly on objective editing measures (completion time, commands issued, and command recall), while participants in the two assistant conditions strongly felt that the assistant's help was valuable. Details can be found in our INTERACT'03 publication.

In the third experiment, we examined the role of an agent's competence in a text-editing task environment like that of the previous study. The embodied conversational agents (ECAs) in this study responded to participants' spoken questions and also made proactive suggestions using a synthesized voice. However, the ECAs varied in the quality of the responses and suggestions they made. Results revealed that the perceived utility of the ECA was influenced by the types of errors it made, while participants' subjective impressions of the ECA were related to their perceptions of its embodiment. In addition, allowing participants to choose their preferred assistance style (ECA vs. online help) improved objective performance. Details can be found in our AAMAS'04 publication.

In the final experiment, we examined the role of choice and customization. Experimental conditions varied in whether participants were allowed to customize their agent and in the perceived quality or appropriateness of the agent for the tasks. For both tasks, the illusion of customization significantly improved participants’ overall subjective impressions of the ECAs, while perceived quality had little or no effect. Additionally, performance data revealed that the ECA’s motivation and persuasion effects were significantly enhanced when participants chose which agent to use. We found that users' expectations and perceptions of their interaction with an ECA depended very much on the individual’s preconceived notions and preferences regarding various ECA characteristics, and may deviate greatly from the models that ECA designers intend to portray. Details can be found in our CHI'07 publication and my Ph.D. dissertation.
