Extracting Information from Prepared Slides

The ZenPad electronic whiteboard, located in Classroom 2000, supports a lecturer’s ability to display and annotate notes on top of a prepared presentation. These slides are typically PowerPoint presentations that have been converted into a collection of GIF or JPEG images. It is the static GIF or JPEG images that are then loaded into the Zen* system.

After lectures, there is important information that we want to have extracted from each of the GIF or JPEG images. By extracting the text that is on each slide, we can generalize as to what the lecturer was discussing when a particular slide was presented. By determining where the text is on a particular slide, we can determine what the focus of attention is on each slide. For example, when the lecturer circles a word, we can assume that word has something to do with the subject or matter they are discussing at that point in time. Finally, from extracting the textual and spatial information of each slide, we can also gather some structural information about the composition of each slide (such as title and diagram location).

The purpose of this research is to delve into possible ways that this text/ information extraction can be performed using optical character recognition (OCR) techniques. Although fairly accurate OCR systems exist, we assume that the backgrounds used in Classroom 2000 presentations are non-uniform, and that often times the text may be embedded within images, thus making the accuracy of current systems inefficient. In our system, we are typically only trying to recognize character belonging to the 62 classes: [0-9], [a-z] and [A-Z]. Since annotations can be made to the slides during a presentation, it is also our goal to recognize when a lecturer is putting emphasis on a particular topic (i.e.- circling words, inserting asterisks, underlining topics, etc.). We will attempt to derive summaries of the slides presented by combining OCR technology and lecture annotations even when text is not the dominating element shown.

How Imaging Leads to Optical Character Recognition (OCR)?

According to the National Library of Canada, digitization usually refers to the process of converting a paper- or film-based document into electronic form bit by bit. This electronic conversion is accomplished through a process called imaging whereby a document is scanned and an electronic representation of the original is produced. Using a scanner, the imaging process involves recording changes in light intensity reflected from the document as a matrix of dots. The light/ color values of each dot is stored in binary digits, one bit being required for each dot in a black/white scan and up to 32 bits for a color scan [1]. Optical character recognition (OCR) takes this data one step further by converting this electronic data, originally a bitmap, into machine-readable, editable text.

Problems with OCR

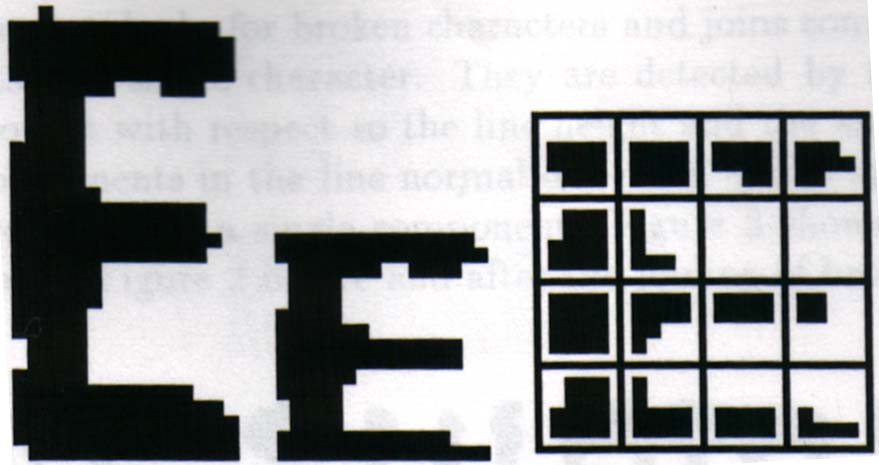

Optical character recognition currently has applications in areas such as document indexing and sorting, forms processing and digital document conversion. The current systems would have unlimited possibilities if they could overcome obstacles such as recognizing text that is embedded, uses varying fonts, irregular backgrounds and varying dimensions. The most precise systems claim an accuracy ratings of 99%4, but this number does not apply to the documents we are trying to OCR. Instead, they are recognizing pages that are fairly uniform in that the text is of the same size and font, a first-generation document is being used (as opposed to a fax, copy, etc.) and no backgrounds are included. Lines, logos and graphics are also unfavorable toward the accuracy of an OCRed document5. In these cases, an accurate system can claim rating of 80-85%, which may seem precise but actually translates to more than one error every two words.

Current OCR techniques

Although optical character recognition is performed using various techniques, five basic steps typically do not change. These include pre-processing, image segmentation, pattern classification, correction and post-processing.

Pattern Classification Methods

Feature extraction

Feature extraction is the most common method of character recognition. This technique identifies a character by analyzing its shape and comparing its features against a set of rules that distinguishes each character. First the recognized character is normalized to a constant size and the resulting character is then compared to standard characters located in a database. There are then various techniques used to perform the actual feature extraction on the characters.

Early OCR systems used an overlay method. With overlay, the scanned character is placed on top of (hence overlay) database characters in search of a "perfect match". This technique worked in some cases but does a poor job when Italics, poor quality documents, or different fonts are used.

The differentiation concept has been widely used in Japanese text. Here, after normalization, each character is broken down into its vector features and differentiated into four directions. The four vector features are then compressed into a 7x7-cell grid to minimize computing resources. At this point, each character has been converted into a set of 196 (7x7x4) characteristic values. This technique seems to provide some advantages to other methods, but Japanese font is much more distinguished that ASCII. The lines composing its characters are typically horizontal, vertical, or at 45o angles, contrary to the curves typically found in ASCII. [6]

The optical character recognizer approach is based on feature extraction coupled with the application of the bayesian classifier. Here, the normalized character is partitioned into regions of size 4x4. Each region is coded to produce a feature vector with 16 elements.

Best-Fit Algorithms

Most modern OCR systems use algorithms to find characters that provide a "good fit" to the one scanned. Some of the more common ones are the bayesian classifier, the generalized Levenshtein distance formula and the Euclidean method. Usually, the distance from the normalized character is calculated using these methods and the character producing the most hits (pixel by pixel) is determined to be the "best fit" character. In case of errors, it is important to also keep characters that are very close to the best-fit value. Some characters such as o and c, are confusing to the best-fit techniques. The o value will always produce a higher number of hits than the c value, causing the system to believe that o is the recognized character.

SRI International has recently developed OCR techniques that eliminate character segmentation, the most error prone step of recognition. They use the features that identify a character (e.g., the dot over an i or the descender underneath a y) also serve to locate that character within a line of text. The technique (based on the machine vision domain of occluded object recognition) obtains a consensus of generic character features. This consensus enables simultaneous determination of both the identity and the location of each character on the page. This approach is robust even in the presence of the image noise that results from the various sources of degradation including repeated photocopy and poor quality originals. The results of this identify-and-locate step are cross-checked against entries in a dictionary to further enhance the accuracy of the output. [7]

OCR and Images

This section will develop into the problems and solutions of images and OCR. Current OCR systems

There are many applications in which the automatic detection and recognition of text embedded in images is useful. Current OCR and other document recognition technology cannot handle these situations well. In the paper I am about to read, a system that automatically detects and extracts text in images is proposed. This system consists of four phases. First, by treating text as a distinctive texture, a texture segmentation scheme is used to focus attention on regions where it may occur. Second, strokes are extracted from the segmented text regions. Using reasonable heuristics on text strings, such as height similarity, spacing and alignment, the extracted strokes are then processed to form tight rectangular bounding boxes around the corresponding text strings. To detect text over a wide range of font sizes, the above steps are first applied to a pyramid of images generated from the input image, and then the boxes formed at each resolution of the pyramid are fused at the original resolution. Third, an algorithm which cleans up the background and binarizes the detected text is applied to extract the text from the regions enclosed by the bounding boxes in the input image . Finally, text bounding boxes are refined (re-generated) by using the extracted items as strokes. These new boxes usually bound text strings better. The clean-up and binarization process is then carried out on the regions in the input image bounded by the boxes to extract cleaner text. The extracted text can then be passed through a commercial OCR engine for recognition if the text is of an OCR-recognizable font. Experimental results show that the algorithms work well on images from a wide variety of sources, including newspapers, magazines, printed advertisements, photographs, digitized video frames, and checks. The system is also stable and robust---the same system parameters work for all the experiments.[8]

This is the actual abstract from a paper that I have yet to analyze. Hence, work in progress.

Conclusion

Humans tend to read documents using word-shaping techniques. Instead of concentrating on individual characters, people tend to look at entire words or even word phrases. Words have more features than isolated characters, making their recognition potentially faster and more reliable than individual characters in the presence of image noise. Therefore it is doubtful that character recognition by computers will ever meet or exceed the level at which humans perform it. Nevertheless, the speed that computers read characters is a quality that will make optical character recognition a long-lived topic.

My proposal for future work

I plan to continue research in this area through cs8503 with Professor Gregory Abowd during the Winter quarter. Hopefully, I can develop applications that perform recognition at an adequate level of the slides presented in Classroom 2000.

References

1. Haigh, Susan. Optical character recognition (OCR) as a digitization technology. Network Notes #37. November 15, 1996.

2. Thompson, Chris. OCR/ICR Accuracy and Acceptance- What does it mean? Inform magazine. July 1997.

3

More recent technology is Intelligent Character Recognition, which employs sophisticated Artificial Intelligence-based recognition algorithms that can learn to recognize non-standard fonts and character styles. This technology is achieving increasing success but is focused in handprint recognition applications such as forms digitization. Handwriting recognition remains largely unsuccessful.4

Accuracy = (# of correct character)/ (total # of characters) *1005

For the purpose of this research, a lecturer’s handwriting also needs to be recognized. "Handwriting (not handprint) is considered to be the most difficult character recognition task…with an accuracy rating around 50%." [2] Therefore for our purposes we will focus on acknowledging that handwriting exists on a slide, but we will leave the task of recognizing the actual handwritten characters as future work.36. OCR Technology Overview as presented by the Media Drive Corporation. URL: http://www.mediadrive.co.jp/english/how/what_OCR/overview.html

7. Advanced Automation Technology Center. Advanced character recognition techniques. URL: http://www.erg.sri.com/div-services/aatc/index.html

8. V. Wu, R. Manmatha and E. M. Riseman. Finding Text in Images. UM-CS-1997-009. February, 1997. URL: http://www.cs.umass.edu/Dienst/UI/2.0/Describe/ncstrl.umassa_cs%2FUM-CS-1997-009