Project 2: Local Feature Matching

Notre Dame Example Using Cheat Points

The goal of this project was to implement a feature detection and matching program to find a match features between two images. This is very useful to create panoramas, or determine if two images are a picture of the same thing. The pipeline can be broken down in to three simple parts:

- Harris Corner Detector

- SIFT Feature Descriptors

- Feature Match

Harris Corner Detector

With the Harris Corner Dectector corners can be identified as an area where shifting the image in any direction changes the area greatly. We create the Harris Response by taking the derivative of the image with respect to both the x and y directions, which gives us Ix and Iy Using these we can create derivative products Ixx and Iyy Ixy These three matrices, which are the size of the original image give us the gradient response in the x direction, the y direction, and in the diagonal direction. The Harris Response can then be computed as: HR = (Ixx * Iyy) - (Ixy2) - (alpha*((Ixx+Iyy)2))

We can then take this response and threshold it by some amount, in order to suppress corners with a very low corner score. After this we perform non-maximum suppresion, which will ensure the returned corners are the maximum corners in a certain window.

SIFT Feature Descriptors

SIFT descriptors are created by examing a patch of pixels around a key point. The patch, which is 16x16 pixels, is then divided into 16 4x4 cells. Each of these cells create a histogram of oriented gradients. Each pixel in the cell 'votes' for one of 8 orientation based on its magnitude. Each of the 16 histograms are concatenated to create a 128 wide SIFT descriptor. Each SIFT feature is normalized, then clamped to 0.2, then renormalized. This ensures that no pixels has too much weight in the histogram.

Feature Match

With two lists of features, we next need to find which feature in image 1 matches to which feature in image 2. The simplest method to do so is called the Nearest Neighbor Distance Ratio. For every feature in image 1 we find the Euclidean distance to each feature in image 2. The match for the feature from image 1 is to the feature image 2 with the smallest distance. The confidence of the match can be described as 1/(NN1/NN2), where NN1 and NN2 are the distances to the closest neighbor and second closest neighbor. Sorting the matches by confidence and then taking the top 100 gives us the top 100 matches.

Code Snippets

Harris Corner Detector

% Image derivatives

[Ix Iy] = imgradientxy(image);

% Blur derivatives

Ix = imgaussfilt(Ix, blurSigma);

Iy = imgaussfilt(Iy, blurSigma);

% Derivative products

Ixx = Ix.^2;

Iyy = Iy.^2;

Ixy = Ix.*Iy;

% Blur derivative products

Ixx = imgaussfilt(Ixx, blurSigma);

Iyy = imgaussfilt(Iyy, blurSigma);

Ixy = imgaussfilt(Ixy, blurSigma);

% Harris response matrix

harrisResponse = (Ixx.*Iyy) - (Ixy.^2) - (alpha*((Ixx+Iyy).^2));

% Threshold and non maximal suppression

threshold = 0.1*max(harrisResponse(:));

maxFilter = colfilt(harrisResponse, [maximaSize,maximaSize], 'sliding', @max);

corners = (harrisResponse==maxFilter)&(harrisResponse>threshold);

SIFT Feature Descriptors

% Cut out patch

imagePatch = image(rowTop:rowBot,colLeft:colRight);

% Get dir and mag of keypoint

[imagePatchGradMag, imagePatchGradDir] = imgradient(imagePatch);

% Hold the current feature

feature = [];

% Get gradient mag and dir

[imagePatchGradMag, imagePatchGradDir] = imgradient(imagePatch);

% Get each cell

for cellRow=0:3

for cellColumn=0:3

% Cut out gradient mag/dir of cell

gradMag = imagePatchGradMag((cellRow*cellDimension)+1:((cellRow+1)*cellDimension),

(cellColumn*cellDimension)+1:((cellColumn+1)*cellDimension));

gradDir = imagePatchGradDir((cellRow*cellDimension)+1:((cellRow+1)*cellDimension),

(cellColumn*cellDimension)+1:((cellColumn+1)*cellDimension));

% Histogram array

histo = zeros(1,8);

for i=1:cellDimension

for j=1:cellDimension

% Get mag and dir

dir = gradDir(i,j);

mag = gradMag(i,j);

%%%% Pick histogram bin based on dir (not shown for space reasons)

% Add to bin

histo(histoIndex) = histo(histoIndex) + (mag);

end

end

% Add histogram to feature

feature = [feature histo];

end

end

% Normalize feature, clamp, renormaliz

feature = normalize(feature);

feature(feature>threshold) = threshold;

feature = normalize(feature);

% Add to features

features = [features; feature];

Feature Match

for i=1:size(features1,1)

% Get feature from features1

refFeature = features1(i,:);

% Hold distances

distances = [];

% Get distance to each feature in features2

for j=1:size(features2,1)

testFeature = features2(j,:);

featureDist = sqrt(sum((refFeature - testFeature) .^ 2));

if isnan(featureDist)

featureDist = 0;

end

distances = [distances; featureDist];

end

% Sort from lowest distance to highest

[distances, indecies] = sort(distances, 'ascend');

% Confidences are the inverse ratio of the two distances

confidence = 1/(distances(1)/distances(2));

% Add match to list of matches

match = [i indecies(1)];

matches = [matches; match];

% Add confidence

confidences = [confidences; confidence];

end

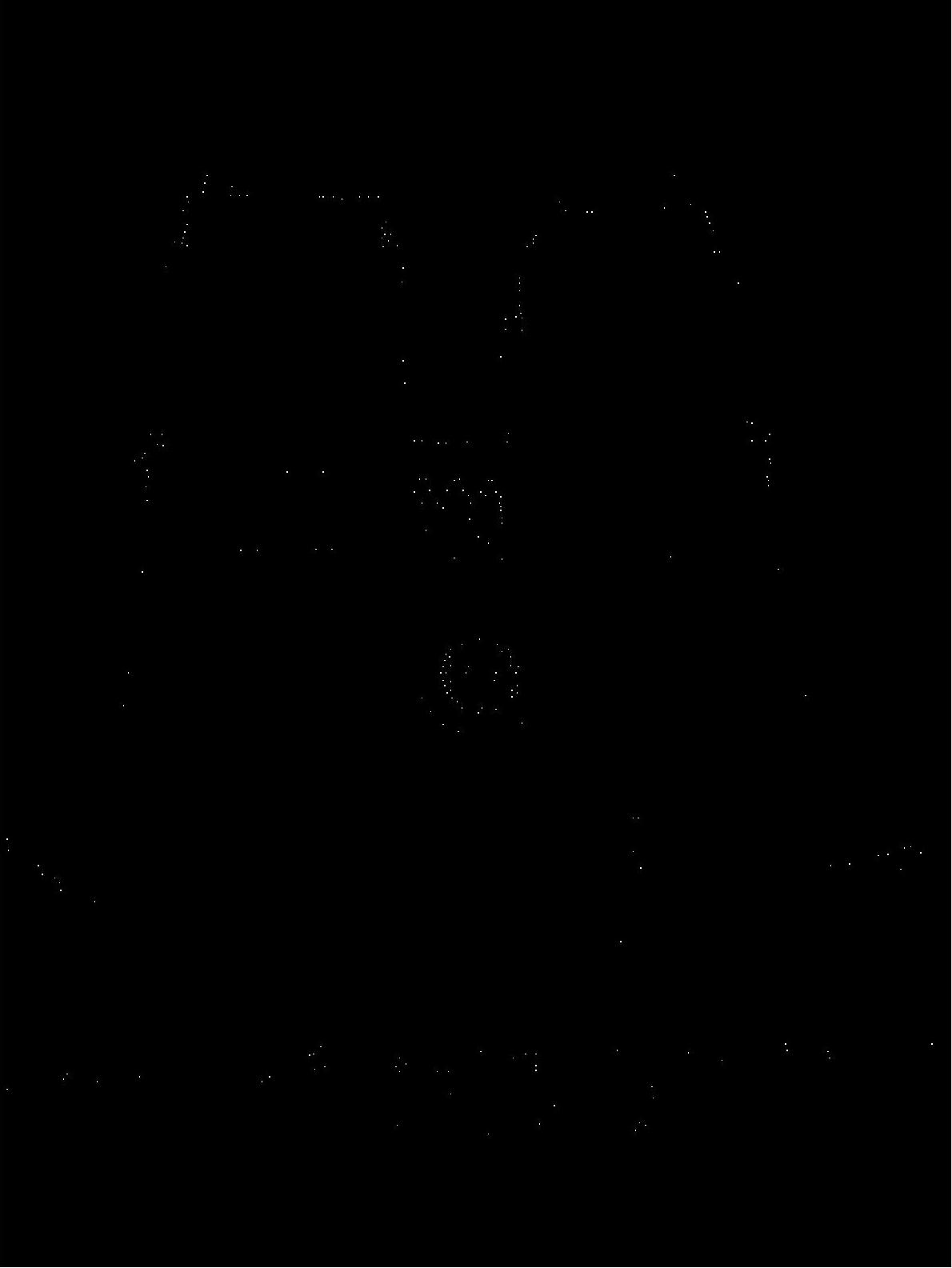

Harris Corner Detector Results

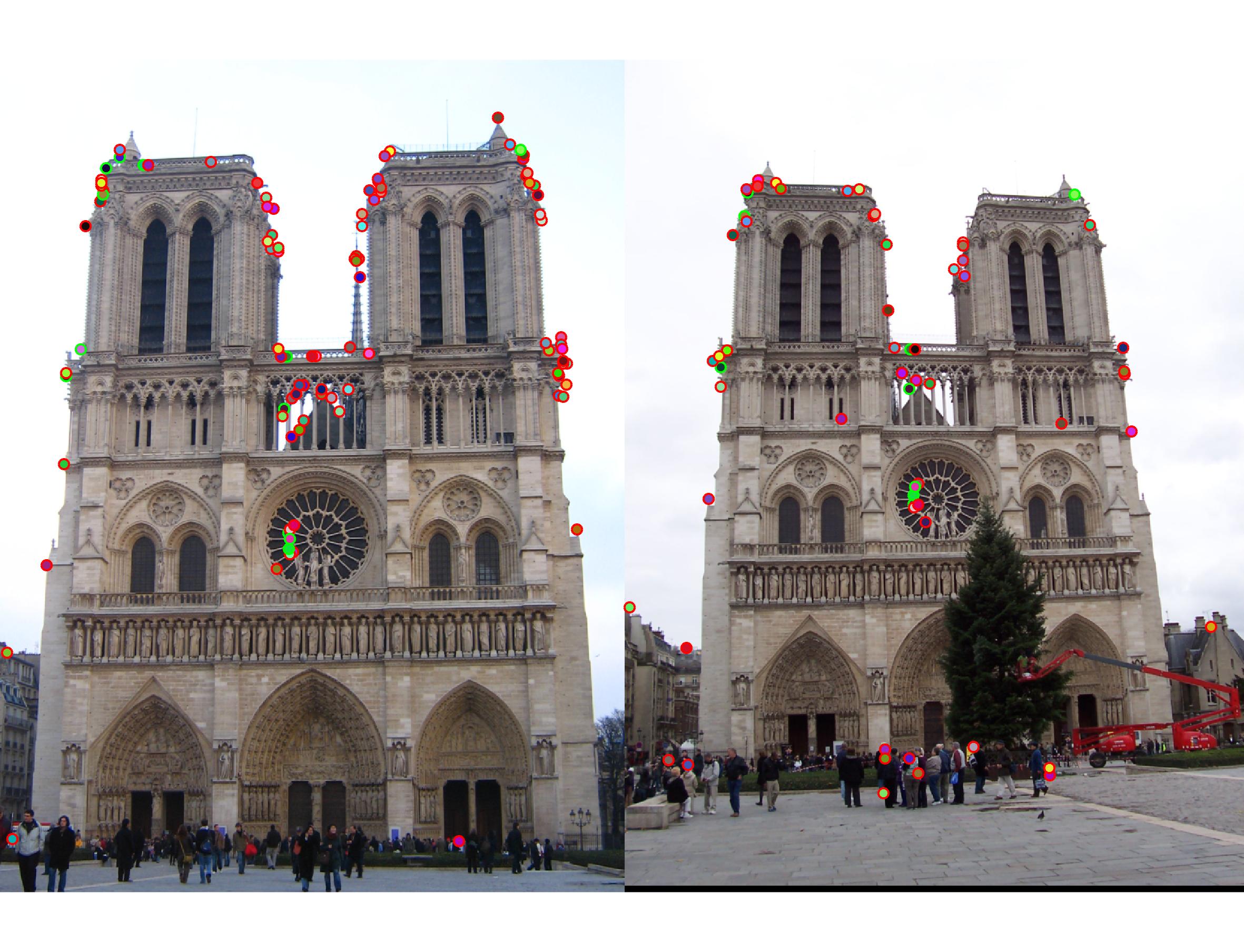

SIFT Feature Descriptors and Feature Matching with Cheat Points

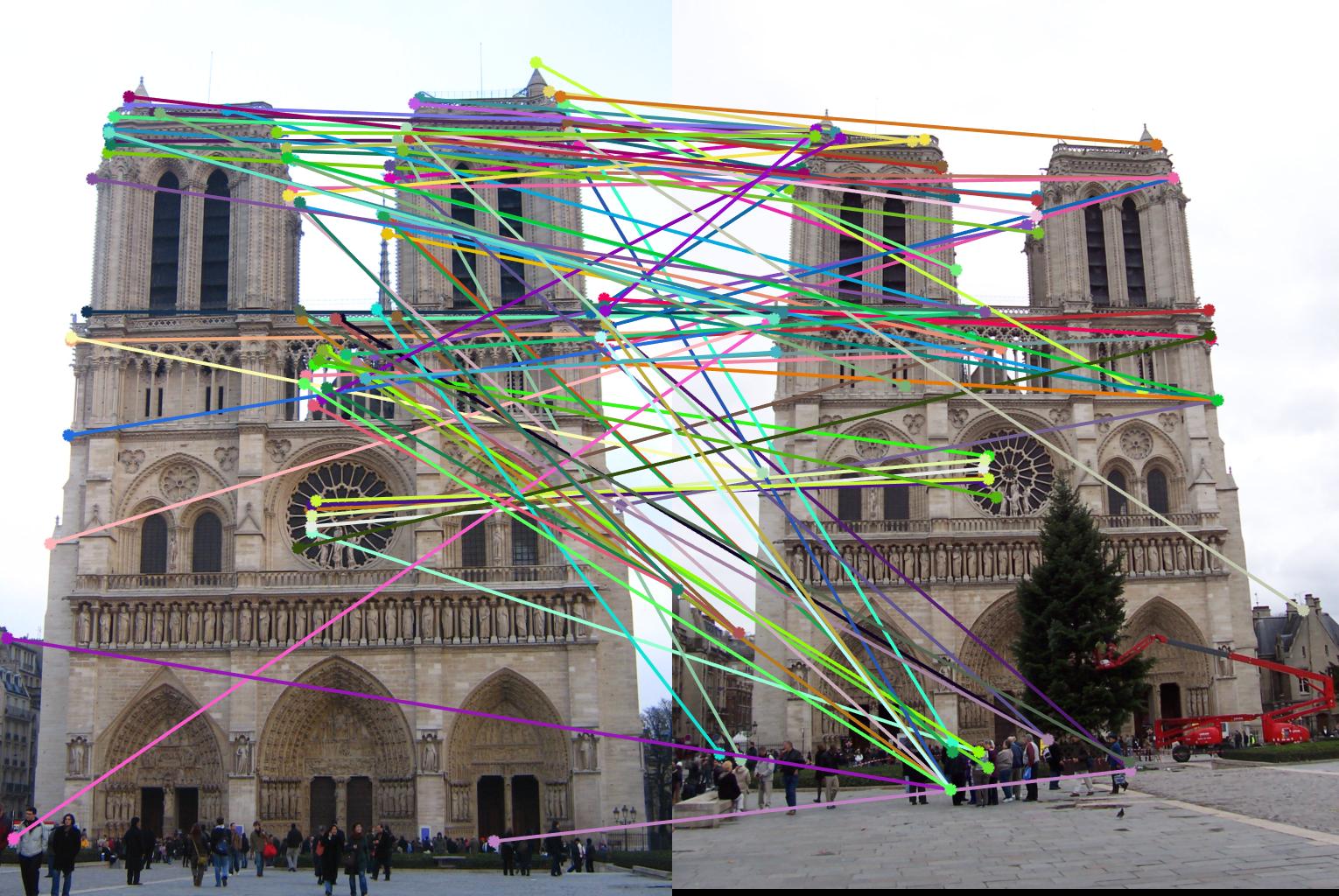

All together

Final Thoughts and Results

With cheat points and using my own of SIFT and feature point I got 72% accuracy. This tells me that these parts of my code worked great. When I implemented my Harris Corner Detector, my accuracy dropped to about 12%. Upon examining the results of my corner detector, the corners looked very "cornery" and yet, features were matched very incorrectly. I could never figure out why this was the case. Each individual part worked correctly, but all together they did not work.